About a year ago, our friends at TokBox published a blog post entitled WebRTC and Signaling: What Two Years Has Taught Us. It's a great overview of their experience with technologies like SIP and XMPP for stitching together WebRTC endpoints. And we couldn't agree more with their observation that trying to settle on one signaling method for WebRTC would have been even more contentious than the endless video codec debates. However, our experience with XMPP differs enough from theirs that we thought it would be good to set our thoughts down in writing.

First, we're always a bit suspicious when someone says they abandoned XMPP because it didn't scale for them. As a general rule, protocols don't scale - implementations do. We know of several XMPP server implementations that can route, distribute, and deliver tens of thousands of messages per second without breaking a sweat. After all, that's what a messaging server is designed to do. Sure, some server implementations don't scale up that high; the answer is to use a better server. We're partial to Prosody, but Tigase and a few others are well worth researching, too.

Second, pure messaging is the easy part. The hard part is defining the payloads for those messages to build out a wide variety of features and functionality. What we like best about the XMPP community is that over the last 15 years they have defined just about everything you need for a modern collaboration system: presence, contact lists, 1:1 messaging, group messaging, pubsub notifications, service discovery, device capabilities, strong authentication and encryption, audio/video session negotiation, file transfer, you name it. Why put a lot of work into recreating those wheels when they're ready-made for you?

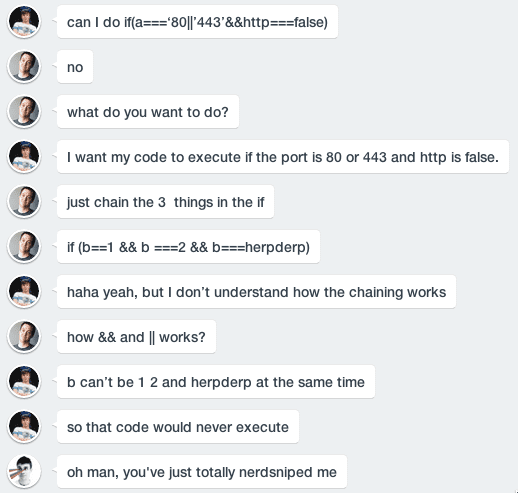

An added benefit of having so many building blocks is that it's straightforward to put them all together in productive ways. For example, XMPP includes extensions for both multi-user chat ("MUC") and multimedia session management (Jingle). If we need multiparty signaling for video conferencing, we can easily combine the two to create what we need. Plus we get a number of advanced solutions for free this way, since MUC includes in-room presence along with a helpful authorization model and Jingle supports helpful features like renegotiation and file transfer. Not to mention that the ability to communicate device capabilities in XMPP enables us to avoid monstrosities like SDP bundling.